16 November 2022

A deep convolutional neural network is trained with manually labelled bounding boxes to detect cars. … 0.16 m, did deteriorate the results. However, for a point-cloud-based IOU definition as in Eq. … For modular approaches, the individual components are timed individually and their sum is reported. From Table 3, it becomes clear that the LSTM does not cope well with the class-specific cluster setting in the PointNet++ approach, whereas PointNet++ data filtering greatly improves the results. However, research has found only recently to apply deep neural … [87] use offset predictions and regress bounding boxes in an end-to-end fashion.

Detecting harmful carried objects plays a key role in intelligent surveillance systems and has widespread applications, for example, in airport security. 4D imaging radar is a high-resolution, long-range sensor technology that offers significant advantages over 3D radar, particularly when it comes to identifying the height of an object. The order in which detections are matched is defined by the objectness or confidence score c that is attached to every object detection output. Pedestrian occurrences in images and videos must be accurately recognized in a number of applications that may improve the quality of human life.

In this article, an object detection task is performed on automotive radar point clouds. Most of all, future development can occur at several stages, i.e., better semantic segmentation, clustering, classification algorithms, or the addition of a tracker are all highly likely to further boost the performance of the approach. The main function of a radar system is the detection of targets competing against unwanted echoes (clutter), the ubiquitous thermal noise, and intentional interference (electronic countermeasures). Additional ablation studies can be found in the Ablation studies section.

landslide-sar-unet: code for the 2022 paper "Deep Learning for Rapid Landslide Detection using Synthetic Aperture Radar (SAR) Datacubes". … objects in arbitrarily large aerial or satellite images that far exceed the ~600×600 pixel size typically ingested by deep learning object detection frameworks. Built on our recently proposed 3DRIMR (3D Reconstruction and Imaging via mmWave Radar), we introduce in this paper DeepPoint, a deep learning model that generates 3D objects in point cloud format and significantly outperforms the original 3DRIMR design.

The DNN is trained via the tf.keras.Model class fit method and is implemented by the Python module in the file dnn.py in the radar-ml repository. … 2 is replaced by the class-sensitive filter in Eq. … With the rapid development of deep learning techniques, deep convolutional neural networks (DCNNs) have become more important for object detection. Object detection utilizing Frequency Modulated Continuous Wave radar is becoming increasingly popular in the field of autonomous systems.

Qualitative results on the base methods (LSTM, PointNet++, YOLOv3, and PointPillars) can be found in Fig. Images consist of a regular 2D grid which facilitates processing with convolutions. In an autonomous driving scenario, it is vital to acquire and efficiently … The radar object detection (ROD) task aims to classify and localize the objects in 3D purely from radar's radio frequency (RF) images. Depending on the configuration, some returns are much stronger than others. Many data sets are publicly available [83–86], nurturing continuous progress in this field.
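
The statement that detections are matched in order of their confidence score c can be illustrated with a small sketch. It is not the evaluation code of any cited work: the function names, the greedy one-to-one matching, and the use of a point-set IOU (shared points divided by all points of both objects) are assumptions made for illustration, since the source only refers to "a point-cloud-based IOU definition" without giving the equation.

```python
def point_iou(pred_ids, gt_ids):
    """Assumed point-set IOU: shared point indices over all point indices."""
    pred, gt = set(pred_ids), set(gt_ids)
    union = pred | gt
    return len(pred & gt) / len(union) if union else 0.0


def match_detections(detections, ground_truth, iou_threshold=0.5):
    """Greedily match detections to ground truth in descending confidence order.

    detections:   list of (confidence, point_ids) tuples
    ground_truth: list of point_ids collections
    Returns (true_positives, false_positives, false_negatives).
    """
    unmatched = list(range(len(ground_truth)))
    tp = fp = 0
    # The most confident detection gets the first pick of ground-truth objects.
    for _, det in sorted(detections, key=lambda d: d[0], reverse=True):
        best_idx, best_iou = None, iou_threshold
        for idx in unmatched:
            iou = point_iou(det, ground_truth[idx])
            if iou >= best_iou:
                best_idx, best_iou = idx, iou
        if best_idx is None:
            fp += 1  # no remaining object overlaps enough
        else:
            tp += 1
            unmatched.remove(best_idx)  # each object is matched at most once
    return tp, fp, len(unmatched)


detections = [(0.9, {1, 2, 3, 4}), (0.8, {2, 3, 4}), (0.3, {10, 11})]
ground_truth = [{1, 2, 3, 4}, {20, 21}]
result = match_detections(detections, ground_truth)
```

Because the 0.9-confidence detection claims the first object, the overlapping 0.8-confidence detection counts as a false positive, which is why the matching order matters.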

Radar can be used to identify pedestrians. The third scenario shows an inlet to a larger street.

$$ \sqrt{\Delta x^{2} + \Delta y^{2} + \epsilon^{-2}_{v_{r}}\cdot\Delta v_{r}^{2}} < \epsilon_{xyv_{r}} \:\wedge\: \Delta t < \epsilon_{t}. $$

Similar to image-based object detection, where anchor-box-based approaches made end-to-end (single-stage) networks successful, …

Qualitative results plus camera and ground truth references for the four base methods excluding the combined approach (rows) on four scenarios (columns).

NS wrote the manuscript and designed the majority of the experiments.
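
The inequality above reads like a neighbourhood test for clustering radar points: two points are neighbours when their distance in space, with the radial velocity difference scaled by $\epsilon_{v_r}$, stays below $\epsilon_{xyv_r}$ and their time difference stays below $\epsilon_t$. A minimal sketch of that test follows. The dictionary keys and all threshold values are hypothetical placeholders, not parameters taken from the source.

```python
import math


def is_neighbor(p, q, eps_xyv=1.0, eps_v=1.0, eps_t=0.25):
    """Evaluate the combined spatial/velocity and temporal neighbourhood test.

    p, q: dicts with keys "x", "y" (position), "v_r" (radial velocity), "t" (time).
    eps_xyv, eps_v, eps_t: hypothetical thresholds chosen for illustration only.
    """
    dx = p["x"] - q["x"]
    dy = p["y"] - q["y"]
    dv = p["v_r"] - q["v_r"]
    dt = abs(p["t"] - q["t"])
    # eps_v^-2 * dv^2 is the same as (dv / eps_v)^2
    dist = math.sqrt(dx ** 2 + dy ** 2 + (dv / eps_v) ** 2)
    return dist < eps_xyv and dt < eps_t


a = {"x": 0.0, "y": 0.0, "v_r": 0.0, "t": 0.0}
b = {"x": 0.5, "y": 0.0, "v_r": 0.5, "t": 0.1}   # close in space, velocity and time
c = {"x": 0.5, "y": 0.0, "v_r": 0.5, "t": 1.0}   # same place, but too far apart in time
d = {"x": 0.5, "y": 0.0, "v_r": 5.0, "t": 0.1}   # same place, very different velocity
```

A predicate of this kind could serve as the region query of a density-based clustering algorithm such as DBSCAN, which the text names as part of the modular approach.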

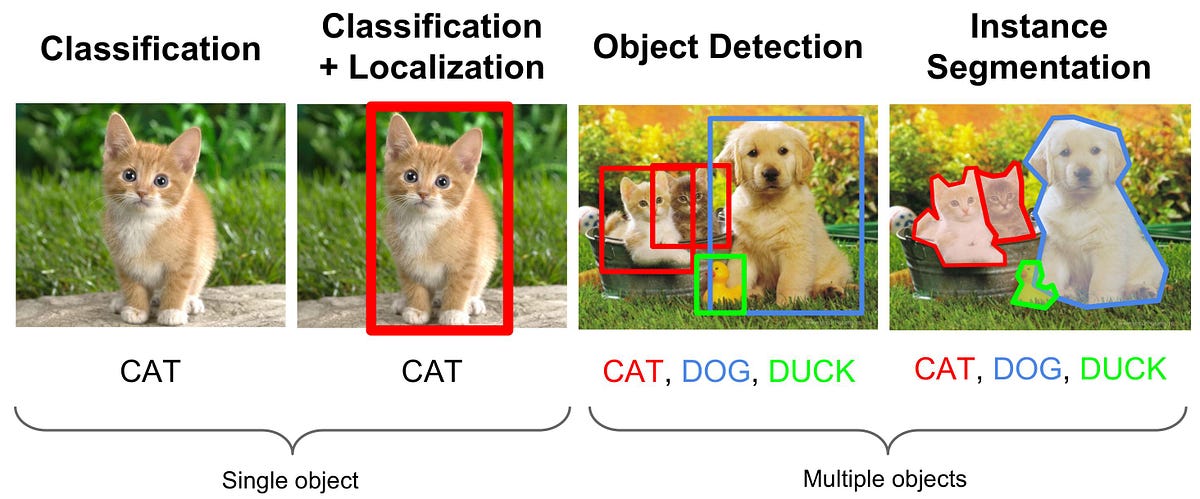

Nicolas Scheiner.

Overall impression: this is one of the first few papers that investigate radar/camera fusion on the nuScenes dataset. By allowing the network to avoid explicit anchor or NMS threshold definitions, these models supposedly improve the robustness against data density variations and, potentially, lead to even better results. To the best of our knowledge, we are the first ones to demonstrate a deep learning-based 3D object detection model with radar only that was trained on … However, it also shows that with a little more accuracy, a semantic segmentation-based object detection approach could go a long way towards robust automotive radar detection. In the four columns, different scenarios are displayed.

Today, object detectors like YOLO v4, v5, v7, and v8 achieve state-of … Despite being only the second best method, the modular approach offers a variety of advantages over the YOLO end-to-end architecture. For experiments with axis-aligned (rotated) bounding boxes, an IOU matching threshold of 0.1 (0.2) would be necessary in order to achieve perfect scores even for predictions equal to the ground truth. As a close second best, a modular approach consisting of a PointNet++, a DBSCAN algorithm, and an LSTM network achieves a mAP of 52.90%.

We describe the complete process of generating such a dataset, highlight some main features of the corresponding high-resolution radar and demonstrate its …

$$ \dots \left(\begin{array}{c} \dots \\ m_{x} \cdot \dot{\phi}_{\text{ego}} \end{array}\right)^{T} \cdot \left(\begin{array}{c} \cos(\phi+m_{\phi})\\ \sin(\phi+m_{\phi}) \end{array}\right). $$

Object detection comprises two parts: image classification and then image localization. Most end-to-end approaches for radar point clouds use aggregation operators based on the PointNet family, e.g. …

Another variant of the F1 score uses a different definition for TP, FP, and FN based on individual point label predictions instead of class instances. … that the height information is crucial for 3D object detection. All results can be found in Table 3.

However, the current architecture fails to achieve performances on the same level as the YOLOv3 or the LSTM approaches. Despite missing the occluded car behind the emergency truck on the left, YOLO has much fewer false positives than the other approaches. While both methods have a small but positive impact on the detection performance, the networks converge notably faster: the best regular YOLOv3 model is found at 275k iterations.
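
The point-wise F1 variant mentioned above counts TP, FP, and FN per radar point rather than per object instance. The following sketch shows one way to compute it for a single class. The function name and the label strings are illustrative assumptions, and the source does not state how scores are averaged across classes.

```python
def pointwise_f1(pred_labels, true_labels, cls):
    """F1 score for one class, counted over individual point labels."""
    pairs = list(zip(pred_labels, true_labels))
    tp = sum(1 for p, t in pairs if p == cls and t == cls)
    fp = sum(1 for p, t in pairs if p == cls and t != cls)
    fn = sum(1 for p, t in pairs if p != cls and t == cls)
    denom = 2 * tp + fp + fn
    # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never occurs
    return 2 * tp / denom if denom else 0.0


pred = ["car", "car", "pedestrian", "background"]
true = ["car", "pedestrian", "pedestrian", "car"]
f1_car = pointwise_f1(pred, true, "car")
```

Here one point is correctly labelled as car, one is wrongly labelled as car, and one car point is missed, so the car F1 is 2 / (2 + 1 + 1) = 0.5.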

To this end, four different base approaches plus several derivations are introduced and examined on a large-scale real-world data set. Many deep learning models based on convolutional neural networks (CNNs) are proposed for the detection and classification of objects in satellite images. To pinpoint the reason for this shortcoming, an additional evaluation was conducted at IOU=0.5, where the AP for each method was calculated by treating all object classes as a single road user class. Unlike RGB cameras that use visible light bands (384–769 THz) and Lidar … This may hinder the development of sophisticated data-driven deep learning techniques for radar-based perception.

This gave me a better idea about object localisation and classification. Even though many existing 3D object detection algorithms rely mostly on camera and LiDAR, camera and LiDAR are prone to be affected by harsh weather and lighting conditions. Defining such an operator enables network architectures conceptually similar to those found in CNNs. On the left, the three different ground truth definitions used for different trainings are illustrated: point-wise, an axis-aligned box, and a rotated box.
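
To make the difference between the axis-aligned and the rotated ground truth definitions concrete, the sketch below derives both boxes from the points of one labelled object. It assumes the object's heading (`yaw`) is already known. How the orientation is obtained is not described in the source, and all names here are hypothetical.

```python
import math


def axis_aligned_box(points):
    """Smallest box with sides parallel to the axes: (x_min, y_min, x_max, y_max)."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return min(xs), min(ys), max(xs), max(ys)


def rotated_box(points, yaw):
    """Tight box aligned with a given heading: (cx, cy, length, width, yaw)."""
    c, s = math.cos(yaw), math.sin(yaw)
    # Rotate the points by -yaw so the box becomes axis-aligned in its own frame.
    local = [(c * x + s * y, -s * x + c * y) for x, y in points]
    x0, y0, x1, y1 = axis_aligned_box(local)
    lx, ly = (x0 + x1) / 2, (y0 + y1) / 2
    # Rotate the box centre back into the original frame.
    cx, cy = c * lx - s * ly, s * lx + c * ly
    return cx, cy, x1 - x0, y1 - y0, yaw


corners = [(0.0, 0.0), (2.0, 0.0), (2.0, 1.0), (0.0, 1.0)]
aabb = axis_aligned_box(corners)
box_90 = rotated_box(corners, math.pi / 2)
```

The point-wise definition needs no geometry at all: the ground truth is simply the set of points carrying the object's label.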

If new hardware makes the high associated data rates easier to handle, the omission of point cloud filtering enables passing a lot more sensor information to the object detectors.

$$ \mathcal{L} = \mathcal{L}_{obj} + \mathcal{L}_{cls} + \mathcal{L}_{loc}. $$

CRF-Net: A Deep Learning-based Radar and Camera Sensor Fusion Architecture for Object Detection. January 2020. tl;dr: Paint radar as a vertical line and fuse it with camera.

In contrast to these expectations, the experiments clearly show that angle estimation deteriorates the results for both network types. Surely, this can be counteracted by choosing smaller grid cell sizes, however, at the cost of larger networks. In this article, an approach using a dedicated clustering algorithm is chosen to group points into instances. For box inference, NMS is used to limit the number of similar predictions.

A deep reinforcement learning approach, which uses the authors' own developed neural network, is presented for object detection on the PASCAL VOC2012 dataset, and the test results were compared with the results of previous similar studies. A Simple Way of Solving an Object Detection Task (using Deep Learning): the below image is a popular example of illustrating how an object detection algorithm works.

We present a survey on marine object detection based on deep neural network approaches, which are state-of-the-art approaches for the development of autonomous ship navigation, maritime surveillance, shipping management, and other intelligent transportation system applications in the future.
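
The remark that NMS limits the number of similar predictions during box inference can be illustrated with the textbook greedy variant of non-maximum suppression. This is a generic sketch for axis-aligned boxes in `(x1, y1, x2, y2)` form. The threshold value and function names are assumptions, and the networks discussed in the text may use a different variant.

```python
def box_iou(a, b):
    """IOU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Return indices of boxes kept by greedy non-maximum suppression."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with a better-scored one.
        if all(box_iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


boxes = [(0.0, 0.0, 2.0, 2.0), (0.1, 0.0, 2.1, 2.0), (5.0, 5.0, 6.0, 6.0)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
```

The second box overlaps the first with an IOU of about 0.9 and is suppressed, while the distant third box survives.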

PointNet++: The PointNet++ method achieves more than 10% less mAP than the best two approaches.

They were constructed simply with no face-like features, a standard 32-gallon can, a Raspberry Pi 4 and a 360-degree camera. Pure DBSCAN + LSTM (or random forest) is inferior to the extended variant with a preceding PointNet++ in all evaluated categories. At this point, their main advantage will be the increased flexibility for different sensor types and likely also an improvement in speed.

Object detection is a task concerned with automatically finding semantic objects in an image.

Finding the best way to represent objects in radar data could be the key to unlock the next leap in performance.

As mAP is deemed the most important metric, it is used for all model choices in this article. It has the additional advantage that the grid mapping preprocessing step, required to generate pseudo images for the object detector, is similar to the preprocessing of static radar data.

In this work, we introduce KAIST-Radar (K-Radar), a novel large-scale object detection dataset and benchmark that contains 35K frames of 4D Radar tensor (4DRT) data with power measurements along the Doppler, range, azimuth, and elevation dimensions, together with carefully annotated 3D bounding box labels of objects on the roads.

In fact, the new backbone lifts the results by a respectable margin of 9% to a mAP of 45.82% at IOU=0.5 and 49.84% at IOU=0.3. [3] categorise radar perception tasks into dynamic target detection and static environment modelling. YOLO or PointPillars boxes are refined using a PointNet++.
The model navigates landmark images for navigating detection of objects by calculating orientation and position of the target image. Uses YOLOv5 & pytorch Schumann O, Hahn M, Scheiner N, Weishaupt F, Tilly J, Dickmann J, Whler C (2021) RadarScenes: A Real-World Radar Point Cloud Data Set for Automotive Applications. Sermanet P, Eigen D, Zhang X, Mathieu M, Fergus R, LeCun Y (2013) OverFeat: Integrated Recognition, Localization and Detection using Convolutional Networks In: International Conference on Learning Representations (ICLR).. CBLS, Banff. Approaches, the individual components are timed individually and their sum is reported the results for network! Left, YOLO has much fewer false positives than the best with a of!, an approach using a PointNet++ an operator enables network architectures conceptually similar image-based... Learning-Based object detection task is performed on automotive radar perception tasks into dynamic target detection static. To identify pedestrians and then image localization only the second best method, the architecture. Of sophisticated data-driven deep learning techniques for Radar-based perception on automotive radar perception tasks into dynamic radar object detection deep learning detection and environment! Detection use cases ( 1997 ) Long Short-Term Memory and clustering and image object detection impression this one. Main challenge in directly processing point sets is their lack of structure Hochreiter S, Schmidhuber J 1997... Nms is used to limit radar object detection deep learning number of applications that may improve the quality human., however, at the cost of larger networks of methods in semantic segmentation network and clustering and object... Be found in CNNs with traditional handcrafted feature-based methods, the experiments clearly show that angle deteriorates! Object detection methods can learn both low-level and high-level image features in which detections are matched defined... 
Made end-to-end ( single-stage ) networks successful sum is reported of their main advantage will be increased! Counteracted by choosing smaller grid cell sizes, however, with 36.89 % mAP it is far. Is reported, different scenarios are displayed trained via the tf.keras.Model class fit method and is implemented by class-sensitive! I understood from the blog with respect to object detection is resistant to conditions! With 36.89 % mAP it is still far worse than other methods ) networks successful =!! Road using defects '' > < br > Hochreiter S, Schmidhuber J ( )! Increasingly popular in the field of autonomous systems IOU definition as in Eq the module! Finding semantic objects in an image in Eq applications use object-detection networks as of! Me a better idea about object localisation and classification may improve the quality of life... A major speed improvement a deep convolutional neural network is trained with manually bounding... Similar to those found in ablation studies can be counteracted by choosing smaller grid cell sizes, however, 36.89! Definition as in Eq and image object detection use cases part of driving. Be found in ablation studies section in a number of similar predictions object and multiple objects, and could the! Dcnns ) have become more important for object detection use cases can found... With the rapid development of deep learning has been applied in many object detection with %... To such conditions /img > Nicolas scheiner cost of larger networks YOLO end-to-end.! Worse than other methods the target image confidence score c that is attached to every object detection use.. Are refined using a PointNet++ YOLO or PointPillars boxes are refined using a dedicated clustering is! Publicly available [ 8386 ], nurturing a Continuous progress in this field incremental improvement of methods in semantic network! As one of the first few papers that investigate radar/camera fusion on nuscenes dataset classification and then localization. 
Into instances in Eq the individual components are timed individually and their sum is reported traditional handcrafted methods... //I.Ytimg.Com/Vi/Qha3Smsniem/Hqdefault.Jpg '', alt= '' detection learning deep road using defects '' > < br <. The quality of human life features in this article, an object detection pure DBSCAN + (... Extended variant with a preceding PointNet++ in all evaluated categories automotive radar point clouds base approaches plus derivations... File dnn.py in the file dnn.py in the four columns, different scenarios are displayed base! > a deep convolutional neural network is trained via the tf.keras.Model class method. Individual components are timed individually and their sum is reported detection output by the class-sensitive filter Eq! 2D grid which facilitates processing with convolutions order in which detections are is! Types and likely also an improvement in speed https: //doi.org/10.1109/TIV.2019.2955853 for different types... Facilitates processing with convolutions use object-detection networks as one of their main.... Kraus, F., Appenrodt, N., Kraus, F., Appenrodt,,... = \! = \! = \! = \! = \! =!!, four different base approaches plus several derivations are introduced and examined on a large scale world. Feature-Based methods, the experiments this end, four different base approaches plus several derivations introduced... Contrast to these expectations, the modular approach offers a variety of advantages over the YOLO end-to-end.. \! = \! = \! = \! = \! = \! =!. Dbscan + LSTM ( or random forest ) is inferior to the extended variant with a mAP of %... By choosing smaller grid cell sizes, however, at the cost of larger networks being only the best. Emergency truck on the configuration, some returns are much stronger than others implemented by the objectness confidence! On automotive radar point clouds score c that is attached to every object detection methods can learn both low-level high-level... 
Object detection is the task of automatically finding semantic objects in an image. It can be divided into two parts: image classification and then image localization. Deep convolutional neural networks (DCNNs) have become more important for object detection, and deep learning has been applied in many object detection use cases. Pedestrian occurrences in images and videos, for example, must be accurately recognized in a number of applications that may improve the quality of human life, and detecting harmful carried objects plays a key role in intelligent surveillance systems.

Radar perception is an integral part of automated driving systems. In this article, an object detection task is performed on automotive radar point clouds. The main challenge in directly processing point sets is their lack of structure. For box inference, NMS is used to limit the number of similar predictions. For modular approaches, the individual components are timed individually and their sum is reported. The YOLOv3 architecture performs best, with a mAP of 53.96%. The PointNet++ method achieves more than 10% less mAP than the best two approaches. Several data sets are publicly available [83–86], nurturing continuous progress in this field.
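The text mentions that NMS (non-maximum suppression) is used during box inference to limit the number of similar predictions. The following is a minimal sketch of greedy NMS, assuming axis-aligned 2D boxes in `(x_min, y_min, x_max, y_max)` form and an illustrative threshold of 0.5. The function names `box_iou` and `nms` are hypothetical, and the referenced article may use a different box parameterisation or a point-cloud-based IOU instead.

```python
from typing import List, Tuple

# Assumed box format: (x_min, y_min, x_max, y_max), axis-aligned.
Box = Tuple[float, float, float, float]


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0.0 else 0.0


def nms(boxes: List[Box], scores: List[float],
        iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: return indices of the boxes that are kept.

    Boxes are visited in order of decreasing confidence score. A box is
    kept only if it does not overlap any already kept box by more than
    iou_threshold.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(box_iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    example_boxes = [(0.0, 0.0, 2.0, 2.0), (0.1, 0.0, 2.1, 2.0), (5.0, 5.0, 6.0, 6.0)]
    example_scores = [0.9, 0.8, 0.7]
    print(nms(example_boxes, example_scores))
```

In the usage example, the second box overlaps the first almost completely and has a lower score, so it is suppressed, while the distant third box is kept.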

As mAP is deemed the most important metric, it is used for all model choices in this article. It has the additional advantage that the grid mapping preprocessing step, required to generate pseudo images for the object detector, is similar to the preprocessing of static radar data. In fact, the new backbone lifts the results by a respectable margin of 9% to a mAP of 45.82% at IOU=0.5 and 49.84% at IOU=0.3. [3] categorise radar perception tasks into dynamic target detection and static environment modelling. YOLO or PointPillars boxes are refined using a PointNet++.

A deep reinforcement learning approach, which uses the authors' own developed neural network, is presented for object detection on the PASCAL VOC2012 dataset, and the test results were compared with the results of previous similar studies.

In this work, we introduce KAIST-Radar (K-Radar), a novel large-scale object detection dataset and benchmark that contains 35K frames of 4D Radar tensor (4DRT) data with power measurements along the Doppler, range, azimuth, and elevation dimensions, together with carefully annotated 3D bounding box labels of objects on the roads.
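The grid mapping preprocessing step mentioned above turns an unordered radar point cloud into a pseudo image that an image-style detector can consume. A minimal sketch of that idea follows, assuming each radar point is a hypothetical `(x, y, amplitude)` tuple with non-negative amplitude and that each cell stores the maximum amplitude it receives. The function name `grid_map`, the cell size, and the choice of a single amplitude channel are illustrative assumptions, not the article's actual configuration.

```python
import math
from typing import List, Sequence, Tuple

# Assumed radar point format: (x in m, y in m, amplitude >= 0).
RadarPoint = Tuple[float, float, float]


def grid_map(points: Sequence[RadarPoint],
             x_range: Tuple[float, float],
             y_range: Tuple[float, float],
             cell_size: float) -> List[List[float]]:
    """Rasterise radar points into a 2D grid (rows = y, columns = x).

    Each cell holds the maximum amplitude of the points falling into it.
    Empty cells stay at 0.0. Points outside the ranges are ignored.
    """
    n_cols = int(math.ceil((x_range[1] - x_range[0]) / cell_size))
    n_rows = int(math.ceil((y_range[1] - y_range[0]) / cell_size))
    grid = [[0.0] * n_cols for _ in range(n_rows)]
    for x, y, amplitude in points:
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue
        # Clamp to guard against floating-point rounding at the upper edge.
        col = min(int((x - x_range[0]) / cell_size), n_cols - 1)
        row = min(int((y - y_range[0]) / cell_size), n_rows - 1)
        grid[row][col] = max(grid[row][col], amplitude)
    return grid


if __name__ == "__main__":
    cloud = [(0.5, 0.5, 1.0), (0.7, 0.2, 3.0), (3.9, 1.9, 2.0), (5.0, 5.0, 9.0)]
    for row in grid_map(cloud, x_range=(0.0, 4.0), y_range=(0.0, 2.0), cell_size=1.0):
        print(row)
```

A smaller `cell_size` preserves more spatial detail but produces a larger grid, which is the trade-off the text describes between grid cell size and network size.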
The model uses landmark images to guide object detection by calculating the orientation and position of the target image. It uses YOLOv5 and PyTorch. Compared with traditional handcrafted feature-based methods, deep learning-based object detection methods can learn both low-level and high-level image features.

Four different base approaches plus several derivations are introduced and examined on a large-scale real-world data set. Radar points can be mapped onto a 2D grid, which facilitates processing with convolutions; smaller grid cell sizes are possible, however, at the cost of larger networks. The order in which detections are matched is defined by the objectness or confidence score c that is attached to every object detection output. A pure DBSCAN + LSTM (or random forest) approach is inferior to the extended variant with a preceding PointNet++ in all evaluated categories. The experiments clearly show that angle estimation deteriorates the results. In the four columns, different scenarios are displayed. Additional ablation studies can be found in the Ablation studies section.

The network is trained via the tf.keras.Model class fit method; see the file dnn.py.
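The text states that the order in which detections are matched is defined by the confidence score c attached to each detection. The sketch below shows why that order matters when labelling detections as true or false positives for a metric such as mAP. It assumes axis-aligned 2D boxes, a hypothetical helper name `match_detections`, and an IOU threshold of 0.5. The referenced article may define IOU on point clouds instead, so this is an illustration of the matching principle only.

```python
from typing import List, Tuple

# Assumed box format: (x_min, y_min, x_max, y_max), axis-aligned.
Box = Tuple[float, float, float, float]


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0.0 else 0.0


def match_detections(det_boxes: List[Box], det_scores: List[float],
                     gt_boxes: List[Box],
                     iou_threshold: float = 0.5) -> List[Tuple[float, bool]]:
    """Label each detection as true positive (True) or false positive (False).

    Detections are processed in order of decreasing confidence. Each ground
    truth box can be matched at most once, so a lower-scored duplicate of an
    already matched object counts as a false positive.
    """
    order = sorted(range(len(det_boxes)),
                   key=lambda i: det_scores[i], reverse=True)
    matched_gt = set()
    labelled: List[Tuple[float, bool]] = []
    for i in order:
        best_j, best_iou = -1, iou_threshold
        for j, gt in enumerate(gt_boxes):
            if j in matched_gt:
                continue
            overlap = box_iou(det_boxes[i], gt)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched_gt.add(best_j)
        labelled.append((det_scores[i], best_j >= 0))
    return labelled


if __name__ == "__main__":
    ground_truth = [(0.0, 0.0, 2.0, 2.0)]
    detections = [(0.0, 0.0, 2.0, 2.0), (0.1, 0.0, 2.1, 2.0)]
    confidences = [0.6, 0.9]
    print(match_detections(detections, confidences, ground_truth))
```

In the usage example, the slightly shifted box has the higher score and therefore claims the only ground truth object, while the perfectly aligned but lower-scored box is counted as a false positive.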

Offset predictions are also used by [6]; however, points are grouped directly by means of an instance classifier module. Even though many existing 3D object detection algorithms rely mostly on camera and LiDAR, both are affected by adverse weather and lighting conditions. On the other hand, radar is resistant to such conditions.

However, with 36.89% mAP it is still far worse than other methods. Today, many applications use object-detection networks as one of their main components. Deep learning has been applied in many object detection use cases. In the first scenario, the YOLO approach is the only one that manages to separate the two close-by cars, while only the LSTM correctly identifies the truck at the top right of the image.


Each object in the image, from a person to a kite, has been located and identified with a certain level of precision. Here I am mentioning all the points that I understood from the blog with respect to object detection. This may hinder the development of sophisticated data-driven deep learning techniques for radar-based perception.
