16 November 2022

We only want the first (and only) element Scrapy can find, so we write .extract_first() to get it as a string. Although you can follow this tutorial with no prior knowledge, it might be a good idea to check out our Scrapy for beginners guide first for a more in-depth explanation of the framework before you get started.

The page is quite similar to the basic quotes.toscrape.com page. All the spider does is request each page to get every quote on the site: it starts at the first page of the quotes API. If a data set is longer than 30 rows, it's split up.

expand and collapse a tag by clicking on the arrow in front of it or by double

Let's say we want to extract all the quotes. Now we have to tell the bot: if you run out of quotes, go to the next page.

    next_page_url = response.xpath('//a[@class="button next"]/@href').extract_first()
    if next_page_url is not None:
        yield scrapy.Request(response.urljoin(next_page_url))

is the name of your environment but you can call it whatever you want. If you're working on a large web scraping project (like scraping product information) you have probably stumbled upon paginated pages. We can also right-click

The Inspector lets you

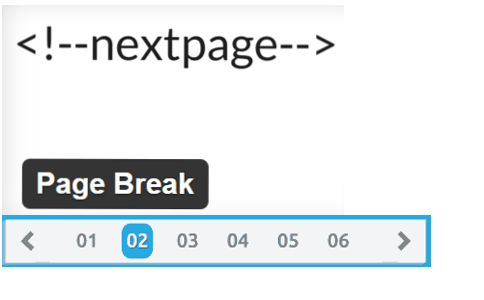

any webpage. What does it mean for our script? Although we're going to use the next button to navigate this website's pagination, it is not as simple in every case.

r = scrapy.FormRequest('https://portal.smartpzp.pl', method='POST', body=json.dumps(form_data), headers=headers, cookies={"JSESSIONID": "Cj8_0LTLSO61Cg8Q3M1mcdRlAKd19pwuo59cQYAg.svln-ppzp-app01:server-one"}). Copy and paste the payload below for a POST request with x-www-form-urlencoded.

In other words, we need to find an ID or class we can use to get the link inside the next button. If we reload the page now, you'll see the log get populated with six

Line 4 prompts Scrapy to request the next page URL, which will get a new response, and to run the parse method.

Scrapy is a fast high-level screen scraping and web crawling framework, used to crawl websites and extract structured data from their pages. Accordingly the type of the request in the log is html. Web scraping is a technique to fetch information from websites. Scrapy is used as a Python framework for web scraping. Your command prompt should look like this: Now, installing Scrapy is as simple as typing.

Developers tend to use different structures to make it easier to navigate for them and, in some cases, optimize the navigation experience for search engine crawlers like Google and real users. The Scrapy way of solving pagination is to use the URL often contained in the next page button to request the next page. Again, when looking at quotes.toscrape.com, we need to extract the URL from the Next button at the bottom of the page and use it in the next request.
Here our scraper extracts the relative URL from the Next button:

follow the pagination. First, we added our dependencies at the top and then added the API_KEY variable containing our API key. To get your key, just sign up for a free ScraperAPI account and you'll find it on your dashboard. Click on the plus button on the right of the Select page command.

Next, we'll need to change our condition at the end to match the new logic: What's happening here is that we're accessing the page_number variable from the PaginationScraper() method to replace the value of the page parameter inside the URL.

If the handy has_next element is true (try loading

Notice that the page one URL changes when you go back to the page using the navigation, changing to page=0. Here's where understanding the URL structure of the site comes in handy: the only thing changing between URLs is the page parameter, which increases by 1 for each next page.

'listaPostepowanForm:postepowaniaTabela_selection': ''.

Now, after running our script, it will send each new URL found to this method, where the new URL will merge with the result of the

the number of the last div, but this would have been unnecessarily

We are missing information we need.

until there is no "next" button anymore, then continue with the next of the original urls. I attach the code that I work on, scraping house prices in Spain. So you can simply do something like this!

In it you should see something like this: If you hover over the first div directly above the span tag highlighted

You can edit it to do more or use the methodology in your Scrapy project. Enabling this option is a good default, since it gives us

Type Next into the search bar on the top right of the Inspector. The link on this website is a bit tricky, as it has a relative route (not the full route) instead of an absolute one (from the http to the end), so we have to work around that.
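To make the relative-route point concrete, here is a minimal sketch using only the standard library. It assumes the Next button's href is /page/2/ and that we are currently on page 1 of quotes.toscrape.com; inside a Scrapy callback, response.urljoin() plays the same role.

```python
from urllib.parse import urljoin

# The page we are currently on (the base URL of the response).
current_page = "http://quotes.toscrape.com/page/1/"

# The relative route found in the Next button's href attribute (assumed value).
relative_href = "/page/2/"

# urljoin resolves the relative route against the current page,
# much like response.urljoin() does inside a Scrapy callback.
absolute_url = urljoin(current_page, relative_href)
print(absolute_url)
```

Because the href starts with a slash, it replaces the whole path of the current page rather than being appended to it.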

Any of the downloader middleware methods may also return a deferred.

complex and by simply constructing an XPath with has-class("text")

This way every time it changes, it will still send the request through ScraperAPI's servers.

structure as with our first quote: two span tags and one div tag.

(such as id, class, width, etc) or any identifying features like

Developer Tools by scraping quotes.toscrape.com. Sometimes you need to inspect the source code of a webpage (not the DOM) to determine where some desired data is located.

Let's see the code: That's all we need! Our parse code (the first method Scrapy runs) was like this: we selected every div with the quote class, and in a for loop we iterated over each one and sent back the quote, author and tags.

page-number greater than 10), we increment the page attribute

Upon receiving a response for each one, it instantiates Response

First, let's create a new directory (we'll call it pagination-scraper) and create a Python virtual environment inside using the command.

take a look at the page quotes.toscrape.com/scroll.

expand each span tag with the class="text" inside our div tags and

Before we start writing any code, we need to set up our environment to work with Scrapy, a Python library designed for web scraping.

I have a list of links with similarly structured HTML tables and the extraction of those works fine so far.

If we expand the span tag with the class=

automatically loads new quotes when you scroll to the bottom.

'listaPostepowanForm:postepowaniaTabela_columnOrder': 'listaPostepowanForm:postepowaniaTabela:j_idt280,listaPostepowanForm:postepowaniaTabela:j_idt283,listaPostepowanForm:postepowaniaTabela:j_idt286,listaPostepowanForm:postepowaniaTabela:j_idt288,listaPostepowanForm:postepowaniaTabela:j_idt290,listaPostepowanForm:postepowaniaTabela:j_idt294,listaPostepowanForm:postepowaniaTabela:j_idt296,listaPostepowanForm:postepowaniaTabela:j_idt298'. See the docs here.

clicking on Persist Logs.

method we defined before.

see if we can refine our XPath a bit: If we check the Inspector again we'll see that directly beneath our

If you click on the Network tab, you will probably only see

the other hand, does not modify the original page HTML, so you won't be able to

To activate it, just type source venv/bin/activate.

Never use full XPath paths, use relative and clever ones based on attributes

Documentation is pretty explicit about it: from scrapy_splash import SplashRequest

Without further ado, let's jump right into it!

By far the most handy feature of the Developer Tools is the Inspector

copy XPaths to selected elements.

On a simple site such as this, there may not be

I want you to do a small exercise: Think about an online shop, such as Amazon, Ebay, etc.

"Cookie": 'SERVERID=app01; regulations=true; JSESSIONID="Cj8_0LTLSO61Cg8Q3M1mcdRlAKd19pwuo59cQYAg.svln-ppzp-app01:server-one"', "Custom-Token": 'fMnL5d%2CA.0L%5ETV%24WDvF%3F3K%3D1o%5E%3DToE%2Fr'.

For this tutorial, we'll be scraping the SnowAndRock men's hats category to extract all product names, prices, and links. Remember: .extract() returns a list, .extract_first() a string.

Line 2 checks that next_page_url has a value. But what about when a website has more than one page?

Today almost all browsers come with

Your rule is not used because you don't use a CrawlSpider.

tells us that the quotes are being loaded from a different request

Note that the search bar can also be used to search for and test CSS

Understanding the URL Structure of the Website

Page 1: https://www.snowandrock.com/c/mens/accessories/hats.html?page=0&size=48
Page 2: https://www.snowandrock.com/c/mens/accessories/hats.html?page=1&size=48
Page 3: https://www.snowandrock.com/c/mens/accessories/hats.html?page=2&size=48

Notice that the page one URL changes when you go back to the page using the navigation, changing to page=0.

used in Scrapy (in the Developer Tools settings click Disable JavaScript).

A little disclaimer: we're writing this article using a Mac, so you'll have to adapt things a little bit to work on PC.
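Since only the page parameter changes between these URLs, the next URL can be built rather than scraped. The following is a small standard-library sketch of that idea; the helper name page_url is hypothetical and not part of the original tutorial.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# First page of the category, as listed above.
BASE_URL = "https://www.snowandrock.com/c/mens/accessories/hats.html?page=0&size=48"


def page_url(base_url: str, page_number: int) -> str:
    """Return base_url with its 'page' query parameter set to page_number."""
    parts = urlsplit(base_url)
    query = dict(parse_qsl(parts.query))  # e.g. {"page": "0", "size": "48"}
    query["page"] = str(page_number)      # only this parameter changes
    return urlunsplit(parts._replace(query=urlencode(query)))


# URLs for the first three pages (page=0, page=1, page=2).
first_three = [page_url(BASE_URL, n) for n in range(3)]
for url in first_three:
    print(url)
```

In a spider, each of these URLs would be passed to a new request, with the page number increased by 1 after every response.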

raises an error. Create a new Select command. You can edit it to do more or use the methodology in your Scrapy project. (default: True).

Keep reading for an in-depth explanation on how to implement this code into your script, along with how to deal with pages

Instead of a full text search, this searches for

this can be quite tricky, the Network-tool in the Developer Tools

"text" we will see the quote-text we clicked on.

After there are no more professors left on the page to scrape, it should find the href value of the next button and go to that page and follow the same method.

Here's the full code to scrape paginated pages without a next button: Whether you're compiling real estate data or scraping eCommerce platforms like Etsy, dealing with pagination will be a common occurrence and you need to be prepared to get creative.
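As a rough illustration of paging without a next button, the sketch below increments a page number until a page comes back empty. It is not the tutorial's original code: fetch_items is a hypothetical stand-in for whatever request-and-parse step your scraper uses, and the fake data exists only so the example runs on its own.

```python
from typing import Callable, Iterator, List


def scrape_all_pages(
    fetch_items: Callable[[int], List[str]],
    start_page: int = 0,
    max_pages: int = 1000,
) -> Iterator[str]:
    """Yield items page by page until a page returns nothing."""
    page_number = start_page
    # max_pages is a safety cap so a misbehaving site cannot loop forever.
    while page_number < start_page + max_pages:
        items = fetch_items(page_number)
        if not items:  # an empty page means we ran past the last one
            break
        yield from items
        page_number += 1


# Stand-in for real HTTP requests: two pages of data, then nothing.
FAKE_SITE = {0: ["hat A", "hat B"], 1: ["hat C"]}


def fake_fetch(page_number: int) -> List[str]:
    return FAKE_SITE.get(page_number, [])


all_items = list(scrape_all_pages(fake_fetch))
print(all_items)
```

Stopping on the first empty page avoids requesting pages that do not exist, which matters when the real number of pages is unknown.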

Having built many web scrapers, we repeatedly went through the tiresome process of finding proxies, setting up headless browsers, and handling CAPTCHAs. If you've been following along, your file should look like this:

So far we've seen how to build a web scraper that moves through pagination using the link inside the next button. Remember that Scrapy can't actually interact with the page, so it won't work if the button has to be clicked in order for it to show more content.

same attributes as our first. Still, let's see how the URL changes when clicking on the second page.

extract any data if you use <tbody> in your XPath expressions.

on a quote and select Inspect Element (Q), which opens up the Inspector.

'listaPostepowanForm:postepowaniaTabela': 'listaPostepowanForm:postepowaniaTabela'.

Tip: If you want to add more information to an existing file, all you need to do is run your scraper and use a lower-case -o (e.g. scrapy crawl -o winy.csv ). If you want to overwrite the entire file, use a capital -O instead (e.g. scrapy crawl -O winy.csv ). Great job! You just created your first Scrapy web scraper.

function to get a dictionary with the equivalent arguments: Convert a cURL command syntax to Request kwargs.

We have to set that functionality right after the loop ends.

Where the second venv is the name of your environment but you can call it whatever you want.

listaPostepowanForm:postepowaniaTabela_first: START INDEX, listaPostepowanForm:postepowaniaTabela_rows: FETCH ROW COUNT. It won't get confused with any other selectors, and picking an attribute with Scrapy is simple. 'listaPostepowanForm': 'listaPostepowanForm'.
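The two fields above suggest that this form paginates by row offset: _first is the index of the first row and _rows is how many rows to fetch. Here is a hedged sketch of building the payload for a given page. The helper name page_payload is hypothetical, and a real request to this form would very likely need further fields (such as the ones quoted elsewhere in this post) that are left out here.

```python
from urllib.parse import urlencode

# Field prefix taken from the form data quoted in this post.
TABLE = "listaPostepowanForm:postepowaniaTabela"


def page_payload(page_index: int, rows: int = 10) -> dict:
    """Build the pagination fields for a zero-based page index.

    Assumption: the start index is simply page_index * rows.
    """
    return {
        "listaPostepowanForm": "listaPostepowanForm",
        TABLE: TABLE,
        TABLE + "_first": str(page_index * rows),  # START INDEX
        TABLE + "_rows": str(rows),                # FETCH ROW COUNT
    }


# x-www-form-urlencoded body for the third page (rows 20 to 29).
body = urlencode(page_payload(2))
print(body)
```

With 10 rows per page, page index 0 starts at row 0, index 1 at row 10, and so on.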

built in Developer Tools and although we will use Firefox in this

Say you want to find the Next button on the page.

'It is our choices, Harry, that show what we truly are, far more than our abilities.'.

it might take a few seconds for it to download and install it.

we'll simply select all span tags with the class="text" by using

request you can use the curl_to_request_kwargs()

To save us time and headaches, we'll use ScraperAPI, an API that uses machine learning, huge browser farms, 3rd party proxies, and years of statistical analysis to handle every anti-bot mechanism our script could encounter automatically.

The spider is supposed to go to this RateMyProfessors page and go to each individual professor and grab the info, then go back to the directory and get the next professor's info.

exactly the span tag with the class="text" in the page.

This closes the circle: getting a URL, getting the desired data, getting a new URL, and so on until no next page is found. You can use the Twisted method "deferToThread" to run the blocking code without blocking the main thread.

However, we're basically selecting all the divs containing the information we want (response.css('div.as-t-product-grid__item')) and then extracting the name, the price, and the product's link.

'listaPostepowanForm:postepowaniaTabela_rows': '10'.

The advantage of the Inspector is that it automatically expands and collapses

My script would still force the spider to access the roughly 195 pages for Lugo, which are eventually not found because they don't exist.

The Inspector has a lot of other helpful features, such as searching in the

you can now inspect the request.


Not all the information is displayed in the search list, only a summary of every item. We'll ignore the other tabs and click directly on Response.

anywhere. If there is a next page, run the indented statements.

Its equivalent is http://quotes.toscrape.com + /page/2/.

response, we parse the response.text and assign it to data.

really know what you're doing.
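For the API-backed variant, where the response is JSON rather than HTML, the pagination decision can be made from the parsed data. The sketch below assumes the JSON carries a has_next flag and a page number, as the text about has_next suggests; the helper name next_page_number and the sample payload are illustrative only.

```python
import json
from typing import Optional


def next_page_number(response_text: str) -> Optional[int]:
    """Return the next page number, or None when has_next is false."""
    data = json.loads(response_text)  # parse the response body into a dict
    if data.get("has_next"):
        return data["page"] + 1       # assumed key holding the current page
    return None


# Illustrative payloads, not captured from the real API.
sample = '{"has_next": true, "page": 3, "quotes": []}'
last_page = '{"has_next": false, "page": 10, "quotes": []}'

print(next_page_number(sample))
print(next_page_number(last_page))
```

In a Scrapy callback, response.text would be passed in, and a non-None result would be used to build the request for the following page.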


with nth-child a:nth-child(8)

The next page is reached by pressing a "next sheet" button in the form of an HTML form.

about the request headers, such as the URL, the method, the IP-address,

Some key points: parse the xml

clicking directly on the tag.
